Simulation-Led Product Strategy

Designing for Adaptive Outcomes at Scale

Introduction

As we discussed in previous articles (part 1 & part 2), with AI now integrated across the product lifecycle and product managers stepping into strategic orchestrator roles, teams have unprecedented agility and data at their fingertips. The question then becomes: How do we decide the best course when so many options are available? In a fast-changing, complex environment, intuition and linear planning can fail us. This is where the next frontier of product management emerges: using AI not just to speed up execution or inform decisions, but to simulate the future. Simulation-led product strategy means leveraging AI to model various product scenarios, test multiple “future states,” and optimize strategies before committing resources to build anything in the real world.

Think of it as moving from playing chess one move at a time to being able to explore entire sequences of moves in advance. Rather than planning a roadmap and hoping for the best, a product team can ask, “If we implement feature A for customer segment X, what will happen? How does that compare to focusing on segment Y or building feature B instead?” and get evidence-based answers. AI-driven simulation makes this possible by ingesting everything the team and organization know (data, user behavior models, market trends) and projecting outcomes under different assumptions. The result is a strategy process that’s dynamic, continuous, and adaptive.

Strategy is no longer a static document – it’s an ongoing simulation.

This approach is increasingly viable thanks to advances in AI modeling. Companies in complex domains (finance, logistics, gaming) have long used scenario simulations to make decisions; now product management is tapping into similar techniques. For example, modern AI can create digital twins of user populations or markets, virtual replicas that respond realistically to inputs, to test how those users might react to a new product feature or pricing change. According to McKinsey, digital twin technologies are growing explosively (projected to reach a $73.5B market by 2027, growing ~60% annually) as leaders seek to “interact with or modify a product in a virtual space” because it’s “quicker, easier, and safer than doing so in the real world” [1]. This reflects a broader belief: if you can simulate it, you can de-risk it and optimize it. Product strategy is following that mantra.

In this article, we’ll explore what simulation-led strategy means for product teams. We’ll look at the shift from planning “what to build” to modeling what will work, enumerate the key capabilities needed to do this (from autonomous opportunity surfacing to shared simulation environments), and examine how the product manager’s role evolves when they become a sort of “simulator-in-chief.” We’ll also highlight emerging tools and frameworks, such as scenario graphs and agent-based modeling, that teams are starting to use for simulation. Finally, we will consider the strategic and cultural implications: when decisions are based on simulated evidence, how does that improve speed and confidence? And how does it set the stage for the even more autonomous, intent-driven product ecosystems that the final article will explore?

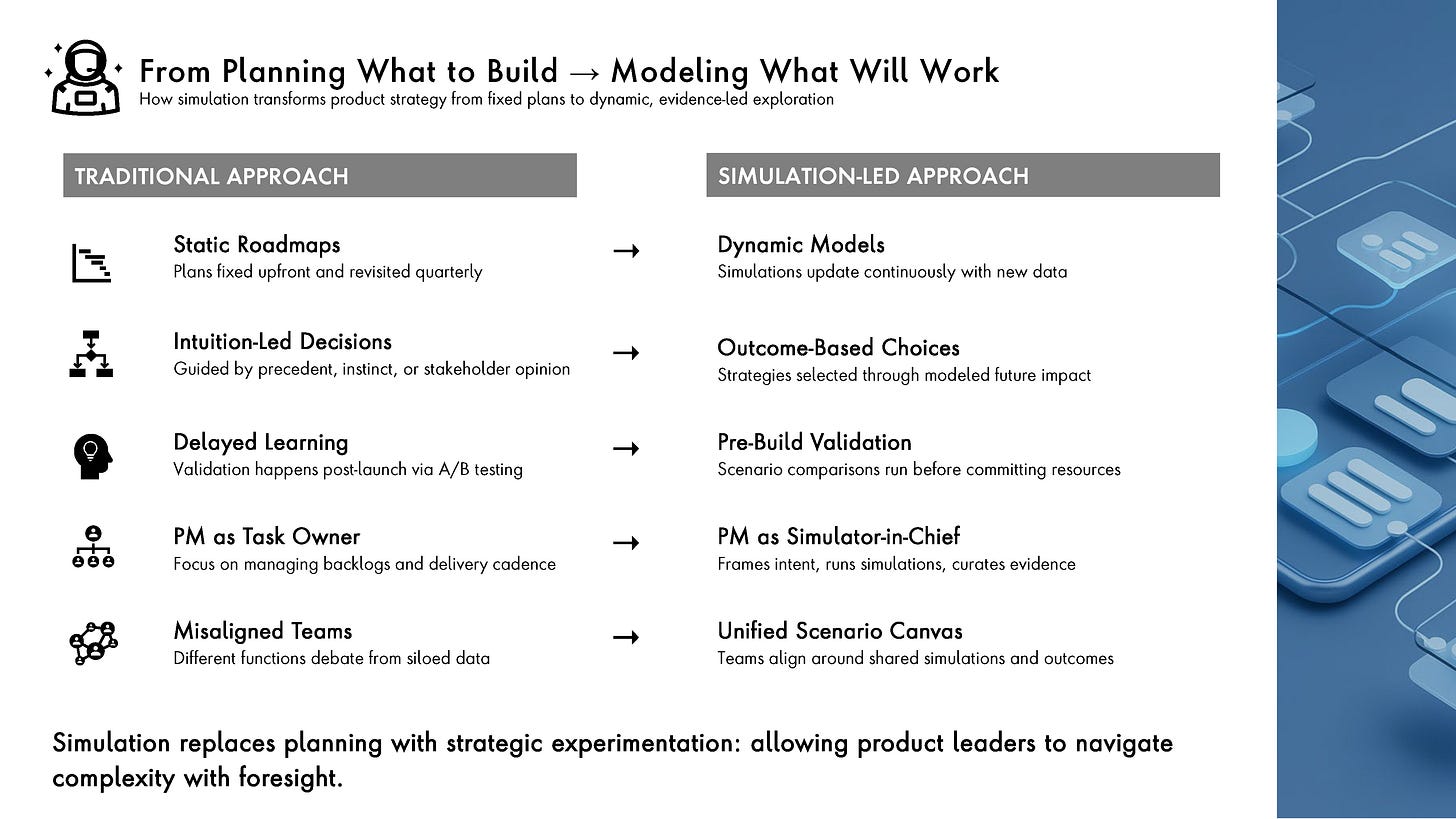

From Planning What to Build ➝ Modeling What Will Work

In a traditional setting, product planning involves roadmaps, requirement docs, and maybe some A/B testing plans post-launch. In a simulation-led approach, the sequence is: Simulate – Compare – Select – Adapt. It’s an iterative loop executed mostly in silico (in the computer) before significant resources are spent in reality.
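To make the simulate, compare, select, adapt loop concrete, here is a minimal Python sketch. The `simulate` function is a hypothetical stand-in for a real simulation engine (it only draws a noisy outcome around an assumed expected lift), and the strategy names and numbers are invented for illustration.

```python
import random
import statistics


# Hypothetical stand-in for a real simulation engine: one simulated outcome
# (e.g., retention lift) for a strategy under randomly varying conditions.
def simulate(strategy: dict, rng: random.Random) -> float:
    return rng.gauss(strategy["expected_lift"], strategy["uncertainty"])


def simulate_compare_select(strategies: dict, runs: int = 1_000, seed: int = 0) -> tuple:
    rng = random.Random(seed)
    # Simulate + Compare: run every strategy many times and summarize by mean outcome
    summary = {
        name: statistics.mean(simulate(params, rng) for _ in range(runs))
        for name, params in strategies.items()
    }
    # Select: keep the strategy with the best average simulated outcome
    best = max(summary, key=summary.get)
    return best, summary


strategies = {
    "feature_A_for_segment_X": {"expected_lift": 0.04, "uncertainty": 0.02},
    "feature_B_for_segment_Y": {"expected_lift": 0.03, "uncertainty": 0.01},
}
best, summary = simulate_compare_select(strategies)

# Adapt: new evidence lowers feature A's assumed lift, so update the input and rerun
strategies["feature_A_for_segment_X"]["expected_lift"] = 0.02
best_after_update, _ = simulate_compare_select(strategies)
```

The last two lines are the Adapt step: when an assumption changes, the team updates the input and reruns, and under these made-up numbers the selection flips from feature A to feature B.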

The product manager’s role evolves from backlog manager to a scenario planner and experimenter. Instead of asking “what features should we deliver this quarter?”, they might ask “what user outcome should we aim for, and which combination of potential features gets us there best?” and then let simulations inform the answer.

Product leaders use simulation to:

Forecast outcomes of various strategies (feature sets, target segments, pricing models, etc.).

Compare competing product bets side by side with quantitative evidence.

Align cross-functional teams around shared evidence of what could work (ending debates by testing assumptions virtually).

Rapidly adjust to changing market or user behavior by rerunning simulations with updated data whenever something shifts.

Put simply, simulation-led strategy brings the scientific method to product strategy: hypotheses ➝ test (simulate) ➝ learn ➝ decide ➝ implement, and repeat. It substantially reduces reliance on guesswork and hindsight. A team at a major e-commerce company, for instance, could simulate a holiday shopping surge to test whether its planned features and infrastructure will hold up and deliver the desired sales conversion before the season begins, leaving time to adjust strategy proactively.
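The holiday-surge example lends itself to a small Monte Carlo simulation. The sketch below assumes a lognormal traffic surge, a fixed serving capacity, and that traffic beyond capacity does not convert; all three are simplifying assumptions, and every number is hypothetical.

```python
import random


def simulate_holiday_peak(baseline_rps: float, capacity_rps: float, conversion_rate: float,
                          runs: int = 10_000, seed: int = 42) -> dict:
    """Monte Carlo sketch: how often does a holiday surge exceed capacity, and what does it cost?"""
    rng = random.Random(seed)
    overloads = 0
    total_conversion = 0.0
    for _ in range(runs):
        # Assumed surge multiplier: lognormal with a median of about 3x normal traffic
        peak = baseline_rps * rng.lognormvariate(1.1, 0.3)
        if peak > capacity_rps:
            overloads += 1
            # Assumption: only the share of traffic the system can serve converts normally
            total_conversion += conversion_rate * capacity_rps / peak
        else:
            total_conversion += conversion_rate
    return {"p_overload": overloads / runs, "expected_conversion": total_conversion / runs}


current_plan = simulate_holiday_peak(baseline_rps=1_000, capacity_rps=3_000, conversion_rate=0.03)
scaled_plan = simulate_holiday_peak(baseline_rps=1_000, capacity_rps=6_000, conversion_rate=0.03)
```

With these made-up inputs, the current capacity sits almost exactly at the median simulated peak, so roughly half of simulated seasons overload; doubling capacity should bring that to about 1%. Comparing the two plans' expected conversion puts a number on the value of scaling before the season starts.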

This approach addresses a classic challenge: in product development, by the time you know whether a big decision was right or wrong, you’ve already invested heavily. Simulation offers a kind of time travel, showing likely impacts up front. As one might expect, this increases confidence in decisions.

Teams move forward knowing not just what they plan to do, but why this path is likely better than the alternatives, backed by data from simulations.

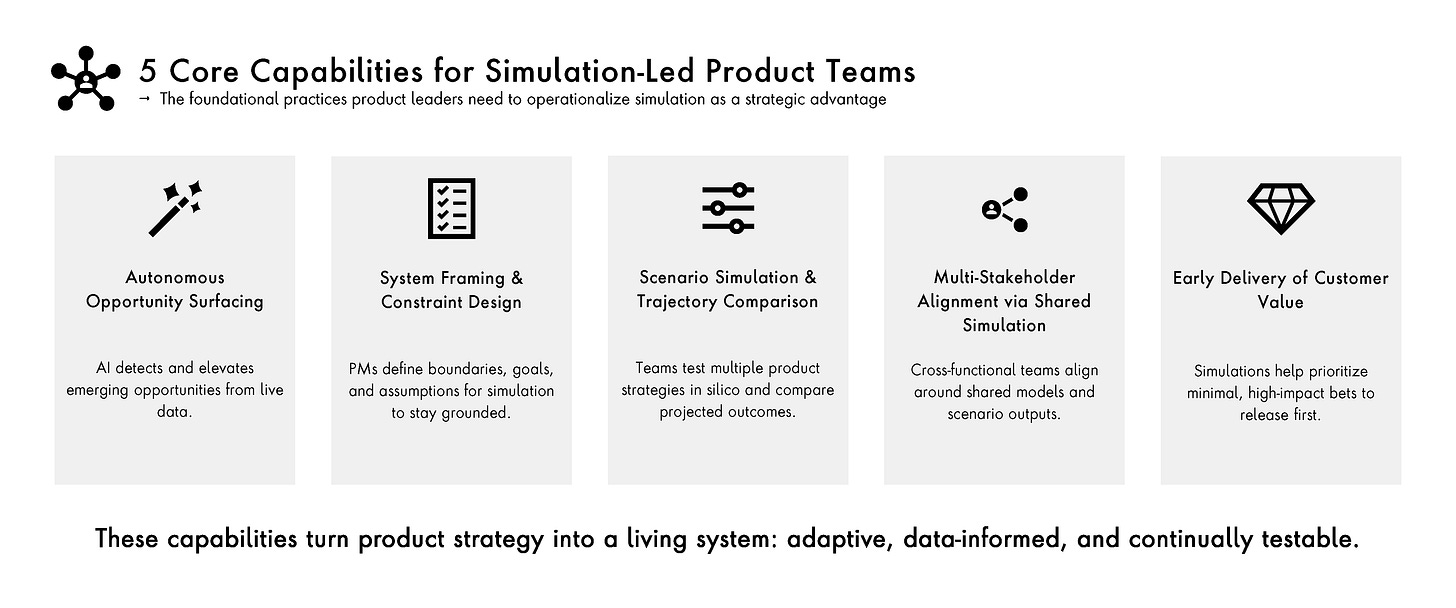

Now, let’s break down the core capabilities of simulation-led product strategy, essentially the building blocks required to practice this approach:

1. Autonomous Opportunity Surfacing

What It Is: AI continuously scans real-time data (market trends, user behaviors, competitive moves) to detect product gaps, emerging user needs, or shifts in behavior that could represent opportunities. This is like an ever-vigilant analyst that never sleeps, flagging, “Hey, there’s a notable uptick in users searching for X within our app,” or “Competitor Y’s new release is causing some of our power users to log in less.”

What It Does: Instead of relying on periodic brainstorming or human analysts poring over dashboards, the AI proactively flags and even ranks opportunities. It might score them based on potential impact or alignment with business goals. For example, an AI might identify that “latent demand for a feature to integrate with Zoom is high among enterprise customers” by correlating support ticket mentions and usage patterns – something a human might take weeks to figure out.

Strategic Value: The PM shifts from being an idea hunter to a curator of high-confidence opportunities. When the system serves up vetted possibilities, the team can focus its creative energy on evaluating and refining those, rather than starting from scratch or missing hidden gems. This capability means you’re less likely to be blindsided by a change in user needs or market conditions, because the system is designed to surface such shifts as soon as they become detectable in the data.
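As a deliberately simple stand-in for the AI described above, the sketch below flags any signal whose latest weekly count sits far above its own recent history (a z-score test) and ranks flagged signals by an impact weight. Real systems would use richer models; the signal names, counts, and weights here are hypothetical.

```python
import statistics


def surface_opportunities(signals: dict, impact: dict, z_threshold: float = 3.0) -> list:
    """Flag signals whose latest value is unusually high vs. their own history, ranked by score."""
    flagged = []
    for name, series in signals.items():
        history, latest = series[:-1], series[-1]
        mean = statistics.mean(history)
        spread = statistics.stdev(history) or 1.0  # guard against a perfectly flat history
        z = (latest - mean) / spread
        if z >= z_threshold:
            # Score = how unusual the uptick is, times an assumed business-impact weight
            flagged.append((name, round(z * impact.get(name, 1.0), 1)))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical weekly counts: the last value is the current week
weekly_counts = {
    "in-app search: 'zoom integration'": [12, 15, 11, 14, 13, 41],
    "support tickets: 'export to csv'": [30, 28, 33, 31, 29, 32],
    "support tickets: 'zoom'": [5, 6, 4, 5, 6, 19],
}
impact_weights = {"in-app search: 'zoom integration'": 1.5, "support tickets: 'zoom'": 1.2}
ranked = surface_opportunities(weekly_counts, impact_weights)
```

Here the Zoom-related search and support signals are flagged and ranked, while the steady CSV-export tickets are not. The threshold of three standard deviations is an arbitrary choice a team would tune.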

2. System Framing & Constraint Design

What It Is: The product team (led by the PM) explicitly defines the assumptions, objectives, and constraints for the product environment in which simulations will run. Essentially, you set the stage: “Here are the goals (e.g., maximize user engagement without letting NPS fall below a set threshold), here are the assumptions (e.g., our marketing budget is fixed, or user growth will be 5% MoM), and here are the hard constraints (e.g., must comply with GDPR, or cannot exceed infrastructure cost X).”

What It Does: These frames guide the AI’s simulation logic, ensuring the scenarios generated are realistic and relevant. If you don’t provide a frame, simulations could wander into meaningless territory. For instance, without constraints, a simulation might suggest a strategy that yields high engagement but violates privacy – which is a non-starter. By framing properly, you channel the AI to explore within meaningful bounds. This is analogous to setting the rules of a game before letting players (or AI agents) loose.

Strategic Value: Product strategy becomes a guided exploration space rather than a fixed plan. PMs essentially design a strategic sandbox: within it, AI and teams can try various moves, but all stay aligned to the overarching purpose and limitations. This ensures creativity doesn’t go off the rails. It also makes strategy discussions concrete – instead of abstract “what ifs,” you define what success means and let the simulations show how to get there, or if it’s even feasible under the constraints. It turns strategy into a design exercise: designing the system that will find the winning strategy.
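One way to make a frame explicit is to encode it as data that every simulated scenario is checked against. The `SimulationFrame` class below is a hypothetical structure, not a standard API: scenarios that break a hard constraint or fall below the NPS floor are filtered out before any comparison on the objective. The scenarios and their metrics are invented.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class SimulationFrame:
    """Hypothetical frame: the goal, assumptions, and hard limits every simulated scenario must respect."""
    objective: str                                   # metric to maximize, e.g. "engagement"
    min_nps: float                                   # goal guardrail: NPS must stay at or above this
    assumptions: dict = field(default_factory=dict)  # recorded for the simulation engine; not used below
    hard_constraints: List[Callable[[dict], bool]] = field(default_factory=list)

    def admissible(self, scenario: dict) -> bool:
        return scenario["nps"] >= self.min_nps and all(check(scenario) for check in self.hard_constraints)

    def best(self, scenarios: List[dict]) -> Optional[dict]:
        # Only scenarios inside the frame compete on the objective
        in_bounds = [s for s in scenarios if self.admissible(s)]
        return max(in_bounds, key=lambda s: s[self.objective], default=None)


frame = SimulationFrame(
    objective="engagement",
    min_nps=30,
    assumptions={"monthly_user_growth": 0.05, "marketing_budget_fixed": True},
    hard_constraints=[
        lambda s: s["gdpr_compliant"],         # must comply with GDPR
        lambda s: s["infra_cost"] <= 120_000,  # infrastructure cost cap (assumed value for "X")
    ],
)
scenarios = [
    {"name": "personalization without consent", "engagement": 0.42, "nps": 35, "gdpr_compliant": False, "infra_cost": 90_000},
    {"name": "consent-based personalization", "engagement": 0.36, "nps": 41, "gdpr_compliant": True, "infra_cost": 95_000},
    {"name": "real-time everything", "engagement": 0.39, "nps": 38, "gdpr_compliant": True, "infra_cost": 180_000},
    {"name": "aggressive notifications", "engagement": 0.40, "nps": 22, "gdpr_compliant": True, "infra_cost": 70_000},
]
choice = frame.best(scenarios)
```

The highest-engagement scenario is rejected for violating GDPR, another for breaking the cost cap, and a third for dropping NPS below the floor, so the frame selects consent-based personalization even though its raw engagement is the lowest of the four.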

3. Scenario Simulation & Trajectory Comparison

What It Is: AI models simulate the outcomes of different product decisions and external conditions. This can include launching or not launching certain features, targeting different user segments, responding to competitor moves, or even macro changes (like a sudden surge in traffic or a new platform trend). Essentially, each scenario is a possible future of your product and market, which the AI plays out.

What It Does: PMs and teams can evaluate potential futures side by side. For example, a simulation might answer “If we launch Feature X for Segment Y next quarter, what happens to 6-month user retention and LTV?” versus “If we instead improve Feature Z for our core segment, what are those metrics?” The simulation engine would use available data (user behavior models, adoption curves, etc.) to project these outcomes. It’s important to note that these projections are probabilistic, not certain, but they are a significant improvement on pure speculation. Visual scenario “graphs” are often used: branching timelines that show the outcome trajectory of each strategy path.

Strategic Value: Teams move from debate-driven decisions to evidence-based selection of the highest-impact path. It’s much easier to make a call when you have side-by-side projections: Strategy A yields +5% revenue but -2% customer satisfaction, while Strategy B yields +3% revenue and +4% customer satisfaction. Depending on your intent (perhaps you value satisfaction more for long-term brand health), you can choose accordingly. Simulation also uncovers second-order effects; maybe a scenario shows that a short-term gain leads to a long-term downturn due to user fatigue, an insight that is hard to intuit but can be caught in simulation. By comparing trajectories, PMs can confidently back their strategic choices to executives and stakeholders with data. In effect, strategy becomes a series of A/B tests run virtually on a model of your business.
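Because these projections are probabilistic, it helps to compare whole distributions rather than single numbers. The sketch below simulates 6-month retention for the two paths in the example above by drawing an uncertain monthly churn rate many times. The churn means and spreads are hypothetical, and a constant churn rate within each run is a simplifying assumption.

```python
import random
import statistics


def simulate_retention(monthly_churn_mean: float, churn_sd: float, months: int = 6,
                       runs: int = 5_000, seed: int = 7) -> list:
    """Simulated month-N retention across many draws of an uncertain monthly churn rate."""
    rng = random.Random(seed)
    outcomes = []
    for _ in range(runs):
        # Draw one churn rate per run, clamped to a valid probability
        churn = min(max(rng.gauss(monthly_churn_mean, churn_sd), 0.0), 1.0)
        outcomes.append((1 - churn) ** months)
    return outcomes


def summarize(outcomes: list) -> dict:
    deciles = statistics.quantiles(outcomes, n=10)  # 9 cut points: 10th ... 90th percentile
    return {"p10": deciles[0], "median": statistics.median(outcomes), "p90": deciles[-1]}


# Hypothetical churn assumptions for the two paths
launch_x_for_y = summarize(simulate_retention(monthly_churn_mean=0.06, churn_sd=0.03))
improve_z_for_core = summarize(simulate_retention(monthly_churn_mean=0.07, churn_sd=0.01))
```

Under these assumptions, launching Feature X should show the higher median retention (roughly 69% vs. 65%) but the lower 10th percentile (roughly 54% vs. 60%): a better typical case with a worse bad case, which is exactly the kind of trade-off the team's intent has to settle.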

4. Multi-Stakeholder Alignment via Shared Simulation

What It Is: Simulation becomes a shared language and canvas for product, design, engineering, marketing, and others. Instead of each function having its own siloed model or opinion (e.g., marketing thinks lowering price will boost volume, engineering worries it will increase load, etc.), everyone gathers around the simulation results, which incorporate inputs relevant to all.

What It Does: Teams collectively explore and critique the same model-driven options, which reduces bias and miscommunication. For example, a simulation might show that a certain feature could drastically increase support tickets. The customer support lead and the PM see this early and decide either to bolster support or tweak the feature to be simpler – before launch. By having all stakeholders interact with the simulation (adjust parameters, see outcomes), everyone’s concerns are addressed in a unified framework. This often involves interactive dashboards or war-game style workshops where each stakeholder can propose actions and see implications instantly.

Strategic Value: Collaboration accelerates because insight is democratized. Instead of arguing from different data sets or experiences, the team works off a single source of “simulated truth.” It builds a culture of objectivity: the model is not owned by any one department, so ideas are judged on their merits. It also fosters buy-in: when a strategy is chosen, marketing, sales, engineering, and other functions have already seen how it was decided and the data behind it, reducing later resistance. Everyone understands the why, not just the what. In effect, a shared simulation environment aligns the team’s mental model of the project, much as a flight simulator lets a crew rehearse a flight plan together before takeoff.
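A shared simulation can be as simple as one model whose inputs are owned by different functions and whose outputs everyone sees. In the hypothetical sketch below, marketing's price lever, product's adoption estimate, and support's ticket rate all feed the same calculation; the price elasticity and every other default are invented numbers, not benchmarks.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SharedLaunchModel:
    """Hypothetical shared model: each function owns some inputs, and everyone sees all outputs."""
    active_users: int = 200_000
    price_change: float = 0.0          # marketing's lever, e.g. -0.10 for a 10% price cut
    price_elasticity: float = -1.2     # assumed user response to price changes
    adoption_rate: float = 0.25        # product's estimate of feature uptake
    tickets_per_adopter: float = 0.04  # support's estimate of monthly contacts per adopter
    tickets_per_agent: int = 1_500     # monthly tickets one support agent can close

    def outcomes(self) -> dict:
        users = self.active_users * (1 + self.price_elasticity * self.price_change)
        adopters = users * self.adoption_rate
        tickets = adopters * self.tickets_per_adopter
        return {
            "adopters": round(adopters),
            "monthly_tickets": round(tickets),
            "agents_needed": math.ceil(tickets / self.tickets_per_agent),
        }


baseline = SharedLaunchModel()
# Each stakeholder proposes a change to the same model; everyone sees the same results
proposals = {
    "marketing: 10% price cut": replace(baseline, price_change=-0.10),
    "support: simpler onboarding flow": replace(baseline, tickets_per_adopter=0.02),
}
results = {"baseline": baseline.outcomes()}
results.update({name: model.outcomes() for name, model in proposals.items()})
```

Under these assumptions, the marketing proposal raises monthly tickets (more users, so more adopters), while the support proposal halves them and cuts staffing needs from two agents to one. Both effects are visible to every stakeholder before launch.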

5. Early Delivery of Customer Value

What It Is: Using simulation outputs to inform what gets built first and how it’s rolled out, with the explicit aim of delivering value to customers as early as possible. For example, simulations might reveal that a minimal version of Feature X yields 80% of the benefit; thus, the team can prioritize releasing that core first and add enhancements later.

What It Does: Teams start with validated bets, meaning by the time they build something, they already have reason to believe it will matter to users. Simulations can indicate the smallest set of features required to achieve a certain outcome, enabling a more effective MVP. Furthermore, simulation might suggest which user segments will benefit most from a new capability – so the rollout can target them first (perhaps via a beta program), ensuring the initial launch provides real value to a subset of users, who can become advocates.

Strategic Value: Simulation compresses the loop between strategy and value realization. Instead of a long period of development followed by finding out whether value was delivered, you deliver value incrementally and early, because you chose to build first the pieces that the simulation projected to be high-impact. Customers in turn see improvements sooner, which is a win-win. It also reduces risk: by the time you get to more speculative or fringe features, you have a base of successes and learnings. In agile terms, it’s like having a super-informed backlog where the first items are the ones most likely to delight users or solve pain points, because you have effectively “pre-user-tested” them via simulation.
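If simulations produce a projected benefit for each piece of a feature, choosing a minimal first release becomes a small selection problem. The sketch below uses a greedy heuristic (highest benefit per unit of effort first) until 80% of the total projected benefit is covered. Greedy selection is not guaranteed to find the smallest possible set, and the sub-features and numbers are hypothetical.

```python
def minimal_scope(features: dict, target_share: float = 0.8) -> list:
    """Greedy sketch: add sub-features by benefit per unit of effort until the target share of benefit is reached."""
    total_benefit = sum(benefit for benefit, _ in features.values())
    by_value_density = sorted(features.items(), key=lambda item: item[1][0] / item[1][1], reverse=True)
    chosen, captured = [], 0.0
    for name, (benefit, _effort) in by_value_density:
        if captured >= target_share * total_benefit:
            break
        chosen.append(name)
        captured += benefit
    return chosen


# Hypothetical simulation output per sub-feature: (projected benefit, effort in engineer-weeks)
feature_x_parts = {
    "core workflow": (6.0, 3.0),
    "bulk actions": (2.5, 2.0),
    "templates": (1.0, 2.0),
    "custom theming": (0.5, 4.0),
    "api access": (0.5, 5.0),
}
mvp = minimal_scope(feature_x_parts)
```

With these numbers, the core workflow plus bulk actions capture about 81% of the projected benefit (8.5 of 10.5) for 5 of the 16 engineer-weeks, so they form the first release and the rest follows later.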

Together, these capabilities make up a powerful new toolkit for product teams. But capabilities alone don’t create change – how does the role of the product manager evolve in this simulation-led world?

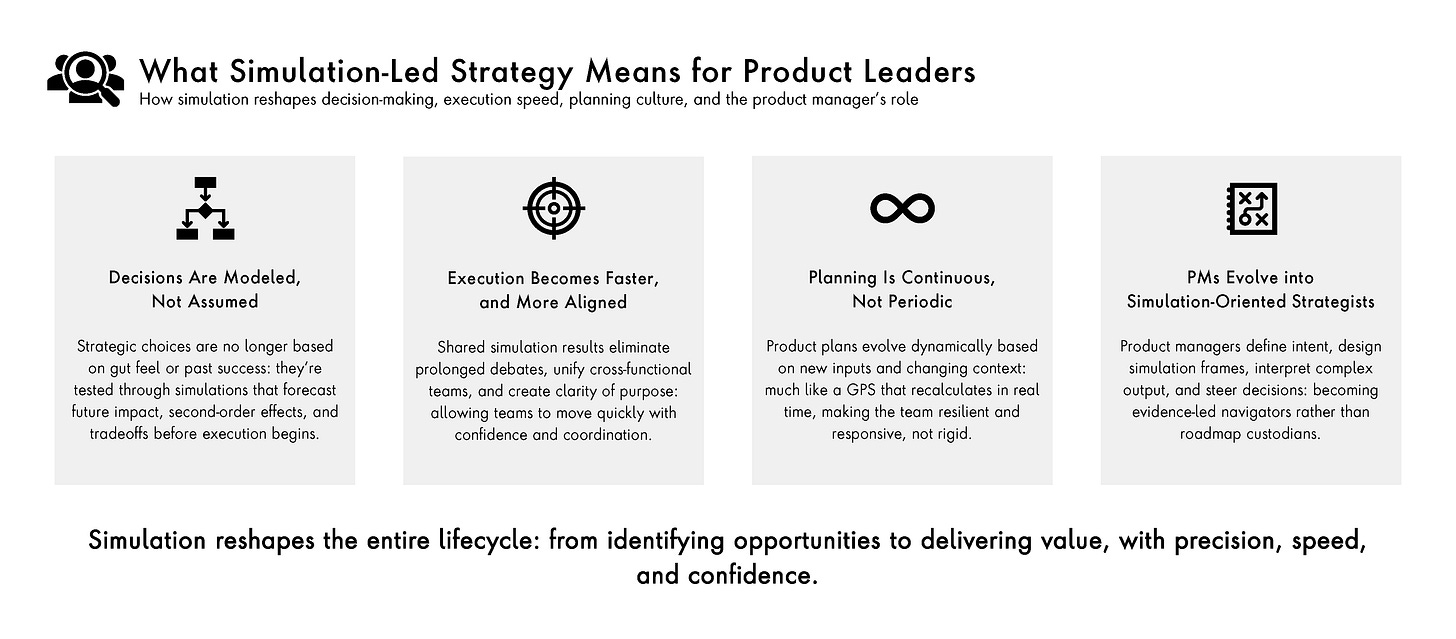

The Evolving Role of the Product Manager in a Simulation-Led World

In a simulation-led environment, the product manager’s job description gains some novel elements. They are no longer just planners and facilitators; they assume roles akin to a futurist and a data-driven storyteller for the product. Key new or expanded hats a PM wears include:

Simulator-in-Chief: Responsible for designing and running strategic “what-if” experiments. The PM decides what scenarios need exploring and interprets the outcomes. For instance, a PM might initiate a simulation of user growth under three different pricing models to inform a pricing strategy decision. They must ensure the simulation is set up correctly, ask the right questions of it, and possibly even tweak the models (in collaboration with data scientists). Not every PM today is versed in simulation tools, but this role is analogous to how PMs adopted A/B testing – you don’t need to code the test, but you need to conceptualize and lead it.

Intent Architect: Similar to the orchestrator role discussed earlier in this series, the PM here defines the goals, boundaries, and success criteria that guide simulations. They ensure the simulations align with the product’s intent and values (e.g., if “improve user wellbeing” is a goal, the PM encodes that so simulations don’t inadvertently optimize for a metric that undermines wellbeing). In short, they set the rules of the simulation game.

Evidence Curator: With potentially massive amounts of simulation data and outcomes, the PM must curate and synthesize these into a compelling narrative to drive direction. They distill, “Out of 50 scenarios tested, here’s what we learned: these two strategies consistently led to the best outcomes on our key metrics, and here’s why we believe Strategy A is our choice.” This involves translating complex model output into human-friendly insights for the team and executives.

System Aligner: Ensuring that insights from simulation translate into real-world alignment. The PM makes sure engineering, design, etc., take the simulation results seriously and adjust their plans accordingly. If a simulation suggests a smaller feature would be smarter to do first, the PM negotiates to pivot the engineering plan to that smaller feature. They also ensure that the assumptions in simulation (like how users behave) are aligned with qualitative insights from user research or sales feedback – if there’s a disconnect, they investigate and refine either the simulation or the understanding of the problem.
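The Simulator-in-Chief and Evidence Curator roles meet when many scenarios have to be boiled down to a recommendation. The sketch below runs a simplified version of the pricing example above, with three price points standing in for the three pricing models, across 50 scenarios with uncertain demand, price sensitivity, and churn, then counts how often each option comes out on top. The revenue model and every range in it are hypothetical assumptions.

```python
import random
from collections import Counter

PRICING_OPTIONS = {"discount $15": 15.0, "standard $20": 20.0, "premium $30": 30.0}


def simulate_revenue(price: float, rng: random.Random, months: int = 12) -> float:
    """Hypothetical 12-month revenue model with uncertain demand, price sensitivity, and churn."""
    demand = rng.uniform(800, 1_200)      # monthly signups at the $20 reference price
    elasticity = rng.uniform(-1.6, -0.6)  # % change in signups per % change in price
    churn = rng.uniform(0.03, 0.08)       # monthly subscriber churn
    signups = max(demand * (1 + elasticity * (price - 20) / 20), 0.0)
    subscribers, revenue = 0.0, 0.0
    for _ in range(months):
        subscribers = subscribers * (1 - churn) + signups
        revenue += subscribers * price
    return revenue


def run_scenarios(n_scenarios: int = 50) -> Counter:
    wins = Counter()
    for scenario in range(n_scenarios):
        # Common random numbers: all pricing options face identical conditions within a scenario
        revenue = {name: simulate_revenue(price, random.Random(scenario)) for name, price in PRICING_OPTIONS.items()}
        wins[max(revenue, key=revenue.get)] += 1
    return wins


wins = run_scenarios()
```

Because each scenario evaluates all three prices under identical conditions, wins reflect the pricing choice rather than the luck of the draw. With these ranges, the $20 option should win most scenarios, with the $15 option winning when price sensitivity is high: the kind of "two strategies consistently led" summary an Evidence Curator would bring to leadership.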

This evolving role implies a shift in product culture, from “What can we build?” to “What is worth building, given where we want to go?” Product teams begin every discussion with outcomes and evidence rather than feature wish lists. It also fosters humility and learning: if a simulation shows a beloved idea won’t work, the culture accepts that evidence and moves on, rather than building it anyway out of ego or sunk-cost thinking.

Tools & Frameworks Emerging for Simulation

To operationalize this approach, new tools and frameworks are coming to the forefront. Some examples include:

Scenario Graphs: Visual representations of multi-branch scenarios, basically decision trees of product choices that map out downstream effects. They help teams visualize and navigate complex strategy paths (“If we do X, these three things happen, which lead to these outcomes; if we do Y, different branches…”). These graphs often come with probabilities or impact metrics on each branch, making it easier to compare.

Digital Twins: As mentioned, digital twins aren’t just for physical products or IoT – we now see digital twins of user segments or entire digital ecosystems. For a software product, a digital twin could simulate how “a typical user cohort” interacts with a series of feature changes or how an entire marketplace (like riders and drivers in a ride-sharing product) reaches equilibrium under different policy changes. Digital twins react to inputs in a way that mirrors reality, providing a safe test bed.

Agent-Based Modeling: Simulating the emergent outcomes from many individual “agents” (which could be users, AI bots, sellers in a marketplace, etc.) each following certain rules. This is useful for complex systems – e.g., modeling how information virally spreads among users or how usage might spike and then plateau. It helps in understanding system dynamics that aren’t obvious from a top-down perspective.

Fitness Functions: Borrowed from evolutionary algorithms, these are custom performance definitions that the AI uses to evaluate success in simulations. For example, a fitness function might be a formula combining revenue, retention, and user happiness scores. The simulation will try to “maximize” this function. PMs and leadership define fitness functions to encapsulate what “good” looks like for the product (possibly including ethical dimensions or long-term brand value, not just short-term profit). This ensures the simulated optimization doesn’t go astray – it’s optimizing for what the team truly cares about.
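To show what agent-based modeling looks like in practice, the sketch below gives each simulated user a few random contacts and two rules: adopt spontaneously with a small probability, or adopt with a probability that grows with the share of contacts who already use the product. The population size, contact count, and probabilities are hypothetical.

```python
import random


def simulate_adoption(n_users: int = 2_000, contacts_per_user: int = 8, p_spontaneous: float = 0.005,
                      p_word_of_mouth: float = 0.3, steps: int = 40, seed: int = 1) -> list:
    """Agent-based sketch: returns the cumulative number of adopters after each step."""
    rng = random.Random(seed)
    # Each agent gets a fixed random set of contacts (a crude stand-in for a social graph)
    contacts = [rng.sample(range(n_users), contacts_per_user) for _ in range(n_users)]
    adopted = [False] * n_users
    cumulative = []
    for _ in range(steps):
        newly_adopted = []
        for user in range(n_users):
            if adopted[user]:
                continue
            share = sum(adopted[c] for c in contacts[user]) / contacts_per_user
            # Rule: adopt spontaneously, or more likely the more contacts already use the product
            if rng.random() < p_spontaneous + p_word_of_mouth * share:
                newly_adopted.append(user)
        for user in newly_adopted:  # synchronous update: all agents react to the same state
            adopted[user] = True
        cumulative.append(sum(adopted))
    return cumulative


curve = simulate_adoption()
new_per_step = [curve[0]] + [later - earlier for earlier, later in zip(curve, curve[1:])]
peak_step = new_per_step.index(max(new_per_step))
```

No rule says "spike, then plateau", yet new adoptions climb to a peak and then fall off as the population saturates, which is the kind of emergent, system-level dynamic that is hard to see from a top-down perspective.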

By employing these tools, teams can manage the complexity of simulation. It’s one thing to run a simulation; it’s another to understand and act on it. Visual tools and clear frameworks help digest simulation output.
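Scenario graphs and fitness functions fit together naturally: the graph enumerates branches with probabilities, and the fitness function scores each leaf. The sketch below reuses the Strategy A and Strategy B figures from the scenario simulation capability as the no-response branches, and adds hypothetical competitor-response branches and probabilities. The weighted-sum fitness function is one simple choice among many.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Node:
    """Scenario-graph node: a leaf with metric outcomes, or probability-weighted branches."""
    label: str
    outcome: Dict[str, float] = field(default_factory=dict)  # metric deltas, in % points
    branches: List[Tuple[float, "Node"]] = field(default_factory=list)


def fitness(outcome: Dict[str, float], weights: Dict[str, float]) -> float:
    # Hypothetical fitness function: weighted sum of the metrics the team cares about
    return sum(weight * outcome.get(metric, 0.0) for metric, weight in weights.items())


def expected_fitness(node: Node, weights: Dict[str, float]) -> float:
    # Leaves are scored directly; inner nodes average their children by branch probability
    if not node.branches:
        return fitness(node.outcome, weights)
    return sum(p * expected_fitness(child, weights) for p, child in node.branches)


def choose(options: List[Node], weights: Dict[str, float]) -> str:
    return max(options, key=lambda node: expected_fitness(node, weights)).label


strategy_a = Node("Strategy A", branches=[
    (0.6, Node("no competitor response", {"revenue": 5.0, "satisfaction": -2.0})),
    (0.4, Node("competitor responds", {"revenue": 2.0, "satisfaction": -3.0})),
])
strategy_b = Node("Strategy B", branches=[
    (0.6, Node("no competitor response", {"revenue": 3.0, "satisfaction": 4.0})),
    (0.4, Node("competitor responds", {"revenue": 2.5, "satisfaction": 3.0})),
])
short_term = choose([strategy_a, strategy_b], {"revenue": 1.0, "satisfaction": 0.0})
long_term = choose([strategy_a, strategy_b], {"revenue": 1.0, "satisfaction": 0.5})
```

With a revenue-only fitness function, Strategy A has the higher expected score (3.8 vs. 2.8); giving satisfaction a weight of 0.5 flips the choice to Strategy B (4.6 vs. 2.6). The graph stays the same; only the definition of "good" changed.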

Strategic Implications

Adopting simulation-led strategy yields several big-picture implications:

Decisions Become Outcomes-Led: Organizations start selecting strategies based on which one promises the best future outcomes, rather than on past precedent or the highest-paid person’s hunch. This can make companies bolder and more innovative, because a simulation will sometimes show that an unconventional approach could yield strong results, giving leaders the confidence to pursue it. It can also prevent costly mistakes by weeding out approaches that look attractive until you see their second-order consequences.

Teams Move Faster with Greater Confidence: Paradoxically, adding an upfront simulation step can speed up the overall cycle. That’s because less time is wasted on dead-end projects or internal debates. Once a decision is made via simulations, execution can be more laser-focused and rapid, since everyone is aligned on why they’re doing it and they’ve pre-tested the idea virtually. It’s akin to measure-twice-cut-once; a bit more effort in planning yields far less rework and hesitation later. One could compare two organizations: one spends 2 months debating then 6 months building something that fails; the simulation-led one spends 1 month simulating, 1 month deciding, and 4 months building the right thing – arriving at success earlier and with fewer scars.

Planning Evolves into Continuous Steering: The role of the roadmap or plan changes from a static contract to a living model. Product planning becomes a continual process of steering based on latest information, much like a GPS that recalculates routes as you drive. This means leadership needs to get comfortable with more fluid plans – you won’t necessarily have a fixed feature list for the next 12 months, but you will have a clear model of your goals and adaptive paths to reach them. It also means product teams must keep simulations and data up to date; strategy work is not one-and-done annually, but ongoing. The benefit is agility and resilience: when something unexpected happens (and in tech, it always does), a simulation-ready team can quickly recalibrate strategy while others might panic or stick their heads in the sand.
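Continuous steering means recomputing the preferred path whenever new evidence arrives. As a lightweight stand-in for rerunning a full simulation, the sketch below uses a Beta-Binomial update: each path's prior belief about its conversion rate is seeded from the simulation's projection, then updated with live results. The priors and observed counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    """Beta(alpha, beta) belief about a path's conversion rate, seeded from simulation."""
    alpha: float
    beta: float

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    def update(self, conversions: int, trials: int) -> "Belief":
        # Conjugate Beta-Binomial update: add observed successes and failures
        return Belief(self.alpha + conversions, self.beta + trials - conversions)


def steer(beliefs: dict) -> str:
    # Recalculate the route: pick the path with the highest expected conversion right now
    return max(beliefs, key=lambda path: beliefs[path].mean)


# Priors encode hypothetical simulation projections: path A ~6%, path B ~5%, each worth ~200 observations
beliefs = {"path_A": Belief(12, 188), "path_B": Belief(10, 190)}
plan_before = steer(beliefs)

# Hypothetical live data: path A underperforms the simulation, path B holds up
beliefs["path_A"] = beliefs["path_A"].update(conversions=30, trials=1_000)
beliefs["path_B"] = beliefs["path_B"].update(conversions=55, trials=1_000)
plan_after = steer(beliefs)
```

Before launch, the simulation favors path A (6% vs. 5%); once live data shows A converting at about 3%, the updated beliefs favor path B (about 5.4% vs. 3.5%), much like a GPS recalculating the route.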

In summary, simulation-led product strategy enables companies to navigate complexity with foresight. It’s like having headlights on a dark road – you might drive faster and more safely because you can see what’s ahead, whereas without simulation you’d inch forward cautiously or risk a wrong turn.

As powerful as simulation is, it raises an intriguing next question: thus far, we’ve used AI to advise and model decisions for human-led execution. But what if AI could take an even more active role – not just recommending what to do, but automating decision-making and execution in real-time based on high-level intent? That is the realm of hyper-scaled, intent-driven product systems. In the final article, we will explore a future where product ecosystems manage and optimize themselves towards goals set by humans, i.e., autonomous product systems that act almost like organisms guided by intent. This is the frontier where simulation turns into real-time action, and AI becomes a true partner in product evolution. Simulation has given us power over potential complexity; next, we’ll discuss wielding AI to handle complexity as it unfolds live. Stay tuned for a glimpse into products that think, adapt, and align to purpose on their own – a vision of the not-so-distant future of product management.

References:

[1] McKinsey & Company, “Digital twins: The key to smart product development,” 2023.

All views expressed here reflect my personal perspectives and are not affiliated with any current or past employer. Portions of this article have been developed with the assistance of generative AI tools to support content structuring, drafting, and refinement.
