Hyper-Scaled AI & Intent-Driven Product Systems

Engineering Products that Think, Adapt, and Align to Purpose

Introduction

In the previous article, we explored how AI can simulate and inform product strategy, keeping human decision-makers in the loop with better foresight. Now, we shift our gaze to a bold vision of the future: product ecosystems that can autonomously execute, learn, and self-optimize in real time, guided only by high-level human intent. This is the world of hyper-scaled AI and intent-driven product systems. Here, products behave less like static offerings and more like adaptive organisms or intelligent services that continually evolve.

The role of the product manager transforms again: from orchestrator and simulator to an architect of intent and steward of ecosystems.

In this scenario, AI is not just forecasting decisions (as in simulation) but increasingly making operational decisions on the fly. Consider a product system like an e-commerce platform: an intent-driven version might autonomously adjust prices, reconfigure the user interface, promote different content, or allocate server resources, all in real time, to achieve goals like maximizing user satisfaction and revenue – without a human manually approving each change. The human sets the goals and boundaries, and the AI “runs” the system within those guardrails. This could extend to physical products and IoT as well – imagine a smart home system that automatically learns and implements energy-saving measures aligned with the homeowner’s comfort preferences and cost goals, effectively managing itself.

This might sound futuristic, but elements of it are already emerging. Autonomous AI agents are being experimented with in various domains, and companies like Amazon have systems that algorithmically manage aspects of their business (e.g., supply chain optimization, real-time personalization) with minimal human intervention beyond initial programming. Gartner predicts that by the end of this decade, we will see AI eliminating a significant chunk of middle-management decision-making roles, as “AI will automate scheduling, reporting, and performance monitoring,” letting remaining humans focus on guiding strategy [1]. The implication for product management is profound: rather than focusing on individual feature decisions, PMs will shape the goals and learning processes of AI-driven systems that handle myriad micro-decisions autonomously.

In this article, we outline the core components of an intent-driven product system, explain what product managers actually do in such a world, and discuss organizational changes needed to support this paradigm. We’ll cover capabilities like intent encoding, autonomous agent coalitions, continuous multi-objective optimization, dynamic ecosystem interoperability, and self-learning systems. These might sound academic, but they break down how a product can effectively run itself in alignment with business and user goals. We’ll also address how companies maintain control and trust in these AI-guided systems – through governance models, ethical frameworks, and new roles such as AI product managers and system ethicists. Finally, we’ll tie this all back to the journey we’ve been on: from infusing AI in the product process (Article 1) to orchestrating systems (Article 2) to simulating strategy (Article 3), and now to products that are AI-native at their core – the culmination of the evolution toward AI-native product ecosystems.

From Strategy ➝ Self-Governance (Intent-Guided Systems)

The fundamental transformation in this stage is moving from human-driven planning to AI-driven self-governance based on human-defined intent.

Previously, even with simulation, humans made the final calls and then implemented changes. In an intent-driven system, humans specify what the system should strive for (the intent and constraints), and the system itself figures out how to adjust and improve continually to fulfill that intent. It’s akin to setting a charter or constitution for the product and letting an AI “government” run day-to-day operations under that charter.

Concretely, this means encoding things like: desired user outcomes (e.g. “minimize user effort to accomplish key tasks”), business goals (e.g. “maximize subscription renewals ethically”), and values/constraints (e.g. “never sacrifice data privacy”, “maintain response time < 200ms”). The AI uses these as North Stars and boundaries. It then monitors everything (telemetry, user behavior, environmental signals) and makes adjustments to the product – possibly releasing updates, reconfiguring features, reallocating resources – in pursuit of those encoded goals.

A simple early example is personalization algorithms that continuously tweak themselves for engagement; the next level is doing this across all aspects of the product experience, and for a balanced set of goals rather than a single KPI.

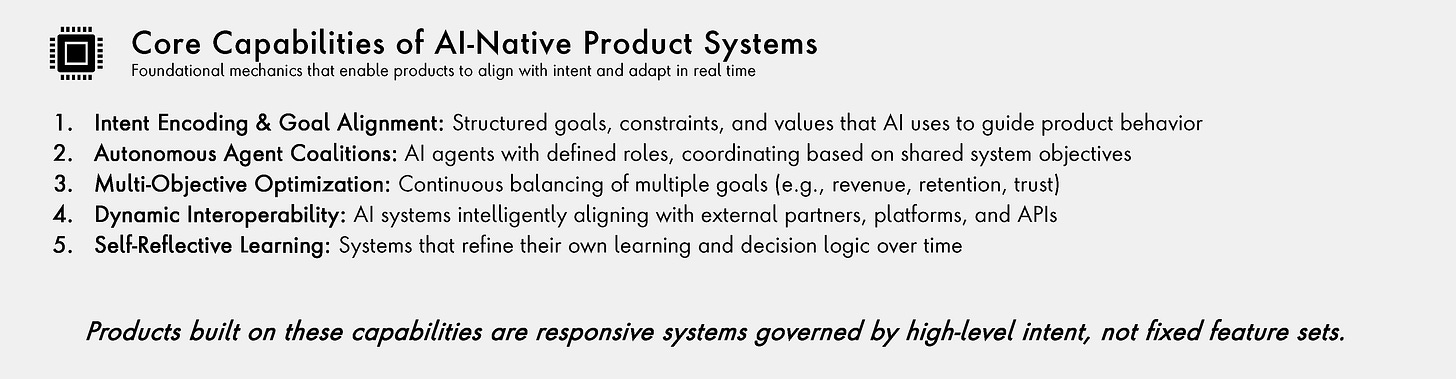

Let’s break down the Core Capabilities of Intent-Driven Product Systems:

1. Intent Encoding & Goal Alignment

What It Is: Capturing the product’s purpose, desired outcomes, and guardrails in a format that AI systems can understand and use for decision-making. This is typically a structured model of intent: it could be a set of weighted objectives (e.g., “maximize retention (50% weight) + revenue (30%) + user trust score (20%)”), plus constraints/ethics (e.g., “don’t target content in manipulative ways”, “ensure fairness across user groups”), and any specific hard goals (e.g., “achieve at least N users by date D”).

What It Does: These intent models are translated into optimization objectives that guide AI agents throughout the product. In effect, the AI has a “mission function” it’s always trying to maximize or satisfy. For instance, an AI managing a social network’s content feed might have an intent encoded to maximize meaningful interactions while minimizing misinformation and ensuring diversity of content. That intent drives the algorithms governing the feed ranking, content moderation AI, etc., aligning all of them to the same ultimate goals rather than isolated metrics.

The product evolves around the purpose it’s meant to fulfill, not just a list of features. This is key: features become malleable means to an end. If the intent is well-specified, the system might even retire or create new micro-features on the fly if it finds a better way to fulfill the intent.

The product becomes less a fixed set of features and more an evolving solution orbiting around user needs and business outcomes.
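To make the idea of an encoded intent more tangible, here is a minimal sketch in Python. It assumes the intent is a set of weighted objectives (using the 50/30/20 example weights from above) plus hard constraints that act as guardrails. The class name `IntentModel`, the metric names, and the sample values are all hypothetical and chosen only for illustration; a real system would need far richer definitions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Metrics = Dict[str, float]


@dataclass
class IntentModel:
    """Hypothetical intent model: weighted objectives plus hard constraints."""
    weights: Dict[str, float]
    constraints: List[Callable[[Metrics], bool]] = field(default_factory=list)

    def is_allowed(self, metrics: Metrics) -> bool:
        # Every guardrail must hold; one violation rules the state out.
        return all(check(metrics) for check in self.constraints)

    def score(self, metrics: Metrics) -> float:
        # The "mission function": weighted sum of objectives, or -inf
        # when a guardrail is violated.
        if not self.is_allowed(metrics):
            return float("-inf")
        return sum(w * metrics[name] for name, w in self.weights.items())


# Illustrative values only: metrics are assumed to be normalized to 0..1.
intent = IntentModel(
    weights={"retention": 0.5, "revenue": 0.3, "user_trust": 0.2},
    constraints=[lambda m: m["response_time_ms"] < 200],
)

healthy = {"retention": 0.8, "revenue": 0.6, "user_trust": 0.9,
           "response_time_ms": 120}
too_slow = {"retention": 0.9, "revenue": 0.9, "user_trust": 0.9,
            "response_time_ms": 350}

print(intent.score(healthy))   # about 0.76
print(intent.score(too_slow))  # -inf, because the latency guardrail fails
```

The design choice worth noticing is that constraints are not weighted against objectives: a state that breaks a guardrail is rejected outright, no matter how well it scores elsewhere.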

2. Autonomous Agent Coalitions

What It Is: A network of AI agents operating independently across different domains of the product, such as UX personalization, pricing, content recommendations, fraud detection, etc., which collaborate with each other as needed. Each agent has a specific role or domain of expertise but they share the common intent and can communicate outcomes.

What It Does: These agents handle their respective areas (for example, one agent might continuously tweak the UI for each user, another manages back-end infrastructure scaling, another adjusts marketing offers) and they coordinate among themselves based on system-wide priorities. For instance, if the pricing optimization agent notices users leaving due to price, it might signal the UX/content agent to show a discount or inform the engagement agent to boost value-adding content to justify the price – all autonomously. They can escalate or defer to each other: e.g., an AI content moderator might “decide” to escalate a borderline content issue to a human if it conflicts with an ethical rule, or a performance optimization agent might throttle a new feature rollout if the stability agent detects rising errors. The PM does not script these day-to-day interactions; rather, the PM sets the rules of engagement and the agents figure out cooperation (akin to an ant colony where each ant has a job but they work in concert through signals).

PM’s Role: Instead of writing user stories, the PM now defines interaction logic and escalation paths among agents. For example, the PM might specify that if the “user trust” metric dips below a threshold, any experiments by other agents are put on hold and a human review is triggered. The PM might also specify that the revenue optimization agent cannot, without sign-off, override user experience decisions when doing so would conflict with a critical usability metric. Essentially, the PM programs the principles of teamwork for these AI agents.

Strategic Value: This multi-agent approach allows a product system to manage complexity at scale. Each agent focuses on one aspect, which is more tractable, yet the overall behavior emerges from their interactions. It’s scalable because you can add agents for new concerns (say accessibility improvements) without overloading a single monolithic AI. It is also robust: if one agent fails or hits a limit, another can compensate (within the coordination rules). This is very much like microservices architecture, but for AI decision-making.
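The “rules of engagement” described above can be sketched as a small coordination rule. The example below assumes the user-trust rule from this section: when trust falls below a floor, every agent's experiments are paused and a human review is queued. `Agent`, `Coordinator`, `trust_floor`, and the sample numbers are hypothetical names and values, not part of any real framework.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Agent:
    name: str
    experiments_paused: bool = False


@dataclass
class Coordinator:
    """Hypothetical rule engine holding the PM-defined rules of engagement."""
    agents: List[Agent]
    trust_floor: float
    review_queue: List[str] = field(default_factory=list)

    def on_metrics(self, metrics: Dict[str, float]) -> None:
        # Rule: if user trust dips below the floor, pause all experiments
        # and escalate to a human reviewer.
        if metrics["user_trust"] < self.trust_floor:
            for agent in self.agents:
                agent.experiments_paused = True
            self.review_queue.append(
                f"user_trust={metrics['user_trust']:.2f} "
                f"below floor {self.trust_floor:.2f}"
            )


coordinator = Coordinator(
    agents=[Agent("pricing"), Agent("ux_personalization")],
    trust_floor=0.70,
)
coordinator.on_metrics({"user_trust": 0.82})  # healthy: nothing happens
coordinator.on_metrics({"user_trust": 0.65})  # below floor: pause and escalate

for agent in coordinator.agents:
    print(agent.name, "paused" if agent.experiments_paused else "running")
print(coordinator.review_queue)
```

Note that the PM writes the rule, not the individual reactions: the agents keep deciding their own actions until the shared rule says otherwise.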

3. Continuous Multi-Objective Optimization

What It Is: Instead of optimizing for a single KPI (like engagement or revenue in isolation), the system can balance multiple objectives simultaneously in real time. This is crucial because real products have to consider trade-offs – for instance, maximizing ad revenue might hurt user experience, so you want a balance. The AI doesn’t just have one target; it has a vector of targets and it seeks an optimal equilibrium among them as conditions change.

What It Does: It makes dynamic trade-offs guided by the intent model’s weights and constraints. For example, the system might temporarily allow a slight dip in short-term revenue if it detects that user satisfaction is dropping, to prioritize long-term retention – because the intent model knows retention is more valuable long-term. If one objective (say system performance) is at risk due to a surge in traffic, the system might reduce the priority of, e.g., an image enhancement feature to ensure speed stays acceptable, thus balancing “feature richness” vs “speed” objectives on the fly. These decisions happen continuously and often invisibly to users.

Trade-offs happen dynamically: one could imagine a live dashboard where at any given second the AI has allocated a certain “focus” to each objective, shifting as needed. It’s like an autopilot adjusting flaps, throttle, etc., to keep the plane on course, except the autopilot is balancing many goals (altitude, speed, fuel efficiency, comfort) all at once.

Product evolution becomes non-linear and adaptive: The system might find creative solutions that a human linear roadmap wouldn’t, such as reducing friction in one area to allow a stricter control in another that overall yields better outcomes. No more one-size-fits-all feature releases – different users or contexts might experience slightly different behaviors of the product tuned to optimize their experience and the business goals at that moment, all consistent with the high-level intent.
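As a rough illustration of such a trade-off, the sketch below picks between two product configurations by weighted score, but only among those that keep response time under the 200 ms guardrail mentioned earlier. The weights, configuration names, and predicted metrics are made-up assumptions; in practice the predictions would come from models, not hard-coded numbers.

```python
from typing import Dict, Optional

# Assumed weights and guardrail, for illustration only.
WEIGHTS = {"satisfaction": 0.5, "revenue": 0.3, "feature_richness": 0.2}
MAX_LATENCY_MS = 200.0


def weighted_score(predicted: Dict[str, float]) -> float:
    """Weighted sum over the objectives named in WEIGHTS."""
    return sum(weight * predicted[name] for name, weight in WEIGHTS.items())


def choose_config(candidates: Dict[str, Dict[str, float]]) -> Optional[str]:
    """Return the best-scoring configuration that respects the latency limit."""
    feasible = {
        name: predicted
        for name, predicted in candidates.items()
        if predicted["latency_ms"] < MAX_LATENCY_MS
    }
    if not feasible:
        return None  # nothing is safe to run; a human should be alerted
    return max(feasible, key=lambda name: weighted_score(feasible[name]))


normal_traffic = {
    "full_features": {"satisfaction": 0.80, "revenue": 0.70,
                      "feature_richness": 1.0, "latency_ms": 150},
    "lite_features": {"satisfaction": 0.75, "revenue": 0.70,
                      "feature_richness": 0.5, "latency_ms": 90},
}
# During a surge, the richer configuration is predicted to be too slow.
traffic_surge = {
    "full_features": {"satisfaction": 0.60, "revenue": 0.70,
                      "feature_richness": 1.0, "latency_ms": 260},
    "lite_features": {"satisfaction": 0.72, "revenue": 0.70,
                      "feature_richness": 0.5, "latency_ms": 140},
}

print(choose_config(normal_traffic))  # full_features
print(choose_config(traffic_surge))   # lite_features
```

The same function yields different answers as conditions change, which is the point: the priorities stay fixed while the chosen behavior shifts.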

4. Dynamic Ecosystem Interoperability

What It Is: The product doesn’t exist in isolation. In a hyper-connected world, products are nodes in broader ecosystems – integrating with partner platforms, utilizing external data, and interacting with other services. Dynamic interoperability means the product’s AI system can interact with external systems or APIs intelligently and align with them on shared intents or protocols.

What It Does: Intent models can span organizational boundaries for partnerships or larger goals. For example, think of a health app that interacts with hospital systems and insurance databases – an intent-driven approach might allow it to align on a broader goal like “improve patient health outcomes while reducing costs”. It could adjust how it nudges user behavior if it knows the healthcare provider’s systems are doing certain interventions, coordinating to avoid duplicating effort or conflicting advice. On a technical level, the product’s AI agents may consume external AI services (like a translation service, or a climate data service) and adapt the product’s behavior accordingly.

Shared goals across systems could be something like an electric vehicle’s charging software aligning with the smart grid’s sustainability objectives – the car might choose to charge at times when renewable energy is abundant, benefitting both the user’s cost and the grid’s stability. The product’s AI would negotiate or sync with the external signals (e.g., grid’s price signals, or a partner platform’s recommendations) to adhere to an overarching intent (like ESG impact, in this case).

Interoperability becomes a competitive advantage. Companies that can seamlessly integrate their AI-driven product with others can deliver composite value that single products can’t. It also future-proofs the product – new integrations can be accommodated by the AI if aligned intents are provided, without heavy re-engineering. Strategically, it shifts competition from product vs product to ecosystem vs ecosystem. If your product can plug into a user’s entire digital life harmoniously, it’s far more resilient and valuable.
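The electric-vehicle example can be sketched in a few lines. The code below assumes the grid publishes an hourly signal with a renewable share and a price, and that the car simply needs a given number of charging hours under a price cap. `GridSignal`, `plan_charging`, and all the numbers are hypothetical; real grid signals and charging protocols are considerably more involved.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class GridSignal:
    hour: int               # hour of day, 0-23
    renewable_share: float  # fraction of supply from renewables, 0..1
    price_per_kwh: float


def plan_charging(signals: List[GridSignal], hours_needed: int,
                  max_price: float) -> List[int]:
    """Pick the greenest affordable hours; cheaper hours win ties."""
    affordable = [s for s in signals if s.price_per_kwh <= max_price]
    ranked = sorted(affordable,
                    key=lambda s: (-s.renewable_share, s.price_per_kwh))
    return sorted(s.hour for s in ranked[:hours_needed])


overnight = [
    GridSignal(22, 0.30, 0.20),
    GridSignal(23, 0.45, 0.15),
    GridSignal(0, 0.60, 0.12),
    GridSignal(1, 0.70, 0.10),
    GridSignal(2, 0.65, 0.11),
    GridSignal(3, 0.40, 0.14),
]

print(plan_charging(overnight, hours_needed=3, max_price=0.18))  # [0, 1, 2]
```

Here the user's cost goal (the price cap) and the grid's sustainability goal (renewable share) are both honored by one simple ranking, which is the kind of shared intent the paragraph above describes.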

5. Self-Reflective & Meta-Learning Capabilities

What It Is: This is AI getting meta – the system not only learns about the domain (users, usage, etc.) but also learns how to learn better over time. It’s an almost human-like self-improvement loop: the AI monitors its own performance as a “learner” and optimizer, and refines its methods accordingly.

What It Does: The system might adjust its own algorithms, retraining frequency, or data pipelines based on what yields better predictions or decisions. For instance, if the AI notices that its user satisfaction predictions are often off in a certain scenario (maybe during holidays, user behavior changes), it can adjust by either seeking new data (perhaps incorporating social media sentiment data during that period) or changing the weight it gives to certain inputs during that time. It can also detect concept drift – e.g., the definition of “engaged user” might evolve if the product adds new features – and update its models to match the new reality.

Essentially, the AI system has a feedback loop for its own learning process. It might even simulate variations of its algorithms and choose a better one (like AutoML doing model selection). This reduces degradation of performance over time and keeps the product’s intelligence fresh.

PM’s Role: The PM (with ML engineers) ensures there is a framework for this self-reflection that is ethical and bounded: e.g., the system can’t decide to learn from user data in a way that violates privacy. The PM also ensures the system doesn’t over-optimize itself in ways that humans can no longer understand.

Strategic Value: The product doesn’t hit plateaus of improvement and can respond to unknown unknowns. It’s one thing to adapt to known types of changes; self-learning helps with novel situations because the system can experiment with new ways of adapting. It also lowers maintenance costs – the AI can fix certain issues itself (like a model that’s losing accuracy) without waiting for a human to notice and retrain it. Overall, the product becomes more autonomous in improving itself, truly like a living organism that not only adapts to survive but also adapts to adapt better in the future.
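One very simple form of this self-monitoring loop is to keep several candidate models running side by side, track each one's recent error, and switch to whichever has been most accurate lately. The sketch below assumes exactly that; it is a toy stand-in for the AutoML-style selection mentioned above, and `ModelSelector`, the two lambda "models", and the data are hypothetical.

```python
from collections import deque
from statistics import mean
from typing import Callable, Deque, Dict


class ModelSelector:
    """Hypothetical selector that activates the model with the lowest recent error."""

    def __init__(self, models: Dict[str, Callable[[float], float]],
                 window: int = 3) -> None:
        self.models = models
        # Rolling window of absolute errors per model.
        self.errors: Dict[str, Deque[float]] = {
            name: deque(maxlen=window) for name in models
        }
        self.active = next(iter(models))  # start with the first model

    def observe(self, x: float, actual: float) -> None:
        # Score every candidate on the new observation, then re-select.
        for name, model in self.models.items():
            self.errors[name].append(abs(model(x) - actual))
        self.active = min(self.errors,
                          key=lambda name: mean(self.errors[name]))

    def predict(self, x: float) -> float:
        return self.models[self.active](x)


selector = ModelSelector(
    models={"baseline": lambda x: 2 * x, "holiday_aware": lambda x: 3 * x},
    window=3,
)

# Regular period: behavior matches the baseline model.
for x in (1, 2, 3):
    selector.observe(x, actual=2 * x)
active_before = selector.active

# Holiday period: behavior shifts, and the baseline's error climbs.
for x in (1, 2, 3):
    selector.observe(x, actual=3 * x)

print(active_before, "->", selector.active)  # baseline -> holiday_aware
```

The rolling window is the bounded part of the loop: the system adapts to a shift without a human retraining it, but only among candidates that humans have already approved.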

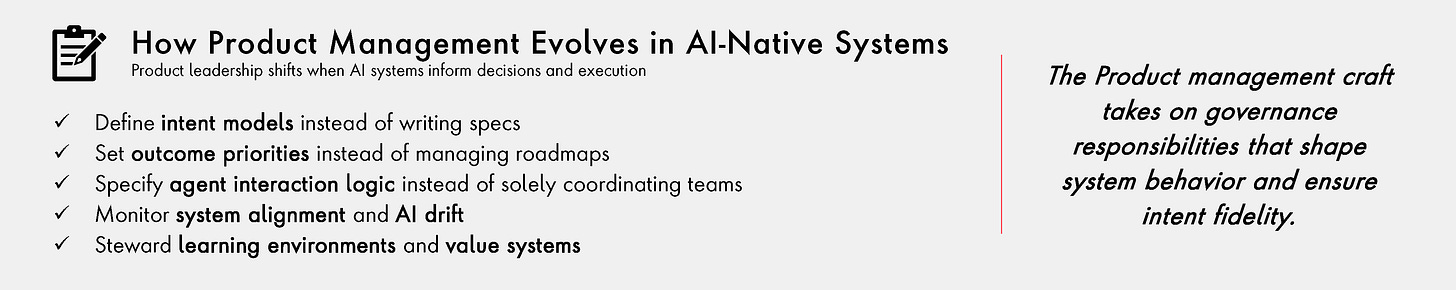

With these capabilities, one can envision a product that is largely self-managing. But where does that leave the product manager and their team? Let’s illustrate how the legacy tasks compare to intent-driven tasks for PMs:

Legacy PM vs. Intent-Driven PM:

Writing detailed feature specs is replaced by encoding goals and constraints. The spec becomes a higher-level document of what the system should achieve and the rules it should follow, rather than how to implement features line by line.

Prioritizing backlogs turns into defining multi-dimensional optimization functions. Instead of ordering features, the PM sets priorities among outcomes (e.g., how to balance growth vs. profitability vs. user satisfaction). The “backlog” in a sense is managed by the AI – it picks what to do next in pursuit of these goals.

Coordinating teams is replaced by designing agent interaction models and ecosystem protocols. The PM ensures that if one AI agent (or team) does something, others know how to respond – essentially establishing contracts and communication channels not just for human teams but also between AI components and external services.

Owning a roadmap changes to monitoring goal alignment and system drift. The PM constantly checks if the product’s autonomous behavior is staying true to the intent (goal alignment) and whether the AI is drifting (maybe optimizing something incorrectly or missing context). They intervene if the system starts going astray or if the intent needs to be updated due to a change in business strategy.

Driving execution shifts to stewarding long-term value systems and learning environments. This means the PM looks after the overall “health” of the AI product system: is it learning the right things, is it fair, is it sustainable, does it continue to deliver value to users and business over time? They focus on nurturing the system rather than pushing tasks.

In a way, the product manager becomes a governance architect. They ensure that the autonomous system is doing the right things for the right reasons. Their job is less about day-to-day decisions and more about setting up the system to make good decisions and then supervising that it remains on track. It’s analogous to moving from being a driver to being the city planner who designs the traffic system – you’re not driving each car, but you set the rules of the road, install traffic lights, and ensure safety mechanisms.

Organizational Implications

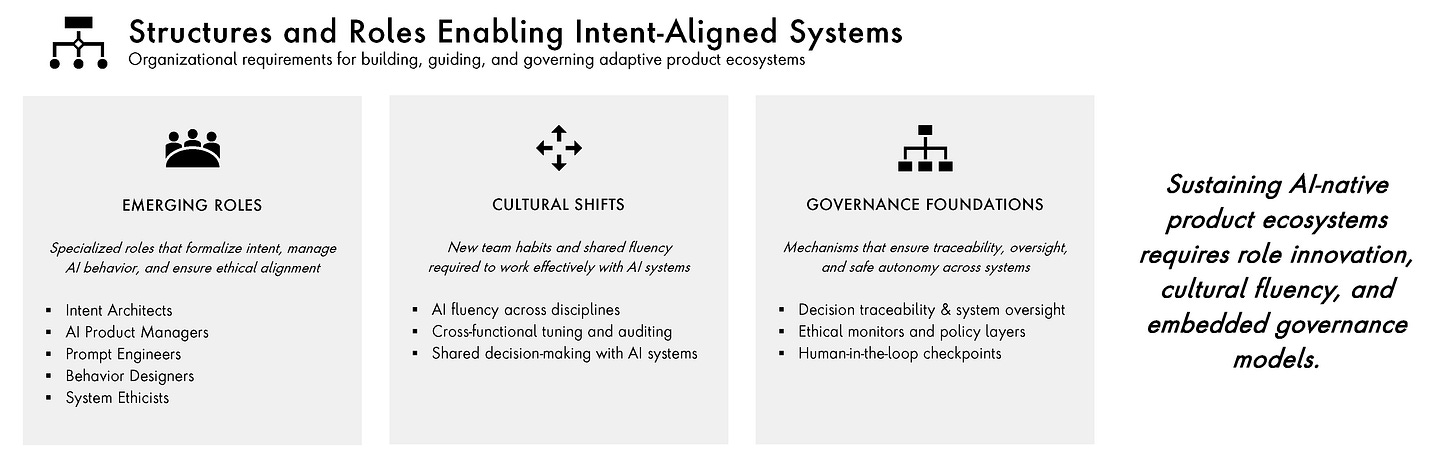

Achieving intent-driven product systems is a deep organizational challenge. It requires new skills, roles, and mindsets within the company:

New Roles & Capabilities

To manage such AI-native products, organizations are already seeing the rise of roles like:

Intent Architects: These could be considered a specialized type of product strategist or business analyst who translates high-level business and customer goals into formalized intent models that AI can work with. They might have expertise in decision theory or systems engineering, working closely with PMs to codify goals and constraints. In smaller companies this might just be part of the PM’s job, but in larger ones, dedicated experts ensure the intent model is robust and comprehensive.

AI Product Managers: While today all PMs are starting to become “AI product managers” in some sense, this refers to PMs who specifically focus on products that are themselves AI-driven, managing AI behavior rather than feature lists. They need to understand AI capabilities and limitations deeply and often partner with data science teams. They ask questions like: “How do we measure success for this algorithm in human terms? Does this autonomous feature truly serve the user’s intent?”

Prompt Engineers & Behavior Designers: As AI agents become more prevalent, people who can craft the right prompts (for LLMs, for example) or shape the interactive behavior of AI (how it converses, how it responds to novel inputs) are valuable. In intent-driven systems, maybe you have an LLM agent that interacts with customers or generates content – prompt engineers would help tune how that agent behaves to align with the brand and goals. Behavior designers might define how various agents escalate issues to humans or how they present their actions (so users understand and trust them).

System Ethicists: When AI is making many decisions autonomously, ethical considerations multiply. System ethicists or AI ethics leads ensure the AI’s actions remain within moral and regulatory bounds. They might develop ethics checklists for AI changes, audit algorithms for bias, and design interventions (or constraints in the intent model) to uphold fairness, transparency, and compliance.

This array of new roles underscores that product development becomes an interdisciplinary exercise involving not just traditional software engineering and UX, but also data science, AI policy, and more.

Culture of AI Fluency

For an organization to successfully run intent-driven product systems, it needs broad AI literacy. It’s not enough to have a few experts; product managers, designers, engineers, marketers – all need a working understanding of how AI works in their context.

Cross-functional Training: PMs and designers learning how to interpret model outputs or identify AI biases; engineers learning how to integrate AI components and monitor them; marketers understanding what AI personalization can/can’t do. When everyone has some AI fluency, teams collaborate more effectively with the AI in the loop.

Working with AI: Teams need to become comfortable treating AI as teammates. For instance, a designer might regularly consult an AI design generator for inspiration and then refine the results, or a support team works alongside an AI chatbot, supervising it. This shifts roles but can augment capabilities significantly.

Auditing and Tuning AI: All roles might share responsibility in keeping AI on track: e.g., a content strategist may notice the AI promoting the wrong kind of content and flag it. So understanding how to “speak to” the AI system (through feedback or adjustments) becomes part of the job. This could involve frameworks for interpretability: teams collectively use dashboards that explain why the AI did X, so they can trust and refine it.

Importantly, decision-making becomes more shared. Instead of a PM unilaterally deciding, say, to roll back a feature, the team collectively interprets AI-driven insights (like a drop in a metric, or an alert from an ethical monitor) and agrees on adjustments, often by tweaking the intent model or constraints rather than debating a feature’s fate in isolation.

Governance Models for AI-Native Products

As autonomy rises, oversight and accountability become critical:

Decision Traceability: The organization needs ways to trace what decisions the AI made and why, especially when those decisions have significant user impact. This might mean logging the rationale or key signals behind autonomous changes, so later one can audit if it was appropriate. For instance, if an AI in a fintech app changed a credit-scoring criterion autonomously, there must be a record and reason that can be reviewed. This traceability builds trust and is often necessary for compliance.

Policy Layers & Ethical Monitors: Companies will layer additional systems to watch the AI. You might have automated checks (another AI or rule system) that monitor for policy violations – e.g., if the product’s AI experiment causes a spike in negative sentiment, a monitor could alert or stop that experiment. Or an ethical AI module that scans decisions for potential bias (like noticing if an algorithm’s change disproportionately affects one demographic). These act as a check-and-balance to free-running optimization.

Human-in-the-Loop Mechanisms: Even in highly autonomous systems, there will be choke points where human approval is required – especially for irreversible or sensitive actions. For example, an AI might identify users to ban for fraud, but perhaps it flags them for a human to review before final action. Or in product changes, maybe major UI overhauls still require a design lead’s sign-off even if AI suggests them. Over time the threshold for human involvement might shift as trust in AI grows, but a governance framework will define where that line is at any time.

Strategic and Ethical Alignment as Differentiator: Companies that can assure users and regulators that their AI is under control and aligned with human values will have an edge. Trust is paramount – if users know the product is largely AI-driven, they need to trust the brand’s AI to treat them right. That’s why things like publishing AI principles, having external audits, and being transparent about AI use become part of product strategy. It’s not just a compliance issue; it’s about brand trust and user comfort.

In essence, as AI becomes a partner in product evolution, governance and ethics move from the periphery to the core of product strategy. It’s not an afterthought; it’s designed into the system (recall we encoded ethics into intent from the start).
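Two of the mechanisms above, decision traceability and human-in-the-loop approval, can be sketched together. The example assumes every autonomous action is recorded with its rationale and key signals, and that actions on a sensitive list wait for human review instead of executing. `DecisionLog`, `SENSITIVE_ACTIONS`, and the sample decisions are hypothetical illustrations, not a reference design.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Dict, List

# Assumed list of actions that always need a human sign-off.
SENSITIVE_ACTIONS = {"ban_user", "change_credit_criterion"}


@dataclass
class DecisionRecord:
    agent: str
    action: str
    rationale: str
    signals: Dict[str, float]
    status: str
    timestamp: str


class DecisionLog:
    """Hypothetical audit trail for autonomous decisions."""

    def __init__(self) -> None:
        self.records: List[DecisionRecord] = []

    def propose(self, agent: str, action: str, rationale: str,
                signals: Dict[str, float]) -> DecisionRecord:
        # Sensitive actions are held for review; the rest are marked executed.
        status = ("pending_human_review" if action in SENSITIVE_ACTIONS
                  else "executed")
        record = DecisionRecord(
            agent, action, rationale, signals, status,
            datetime.now(timezone.utc).isoformat(),
        )
        self.records.append(record)
        return record

    def export(self) -> str:
        # JSON export so auditors can review what was decided and why.
        return json.dumps([asdict(r) for r in self.records], indent=2)


log = DecisionLog()
routine = log.propose("pricing", "apply_discount",
                      "Churn risk above target for this segment",
                      {"churn_risk": 0.42})
sensitive = log.propose("fraud", "ban_user",
                        "Pattern matches known fraud behavior",
                        {"fraud_score": 0.97})

print(routine.status)    # executed
print(sensitive.status)  # pending_human_review
print(log.export())
```

Where the line between the two statuses sits is a governance decision, so in this sketch it lives in one explicit, reviewable list rather than inside any agent's logic.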

Strategic Implications

Finally, zooming out, what does this all mean for the industry and product leadership?

AI Executes, Humans Guide: We’re reaching a point where execution can be largely automated by AI, and human contribution shifts to guidance and purpose. Product teams in the future will be smaller (Marty Cagan has predicted that teams will shrink significantly [2]) and focused on defining the right problems and interpreting the outcomes, rather than doing all the legwork. This flips organizational hierarchies and value – those who can define vision and intent (and empathize with users to set the right goals) become even more valuable, while layers of coordinating middle management may diminish. It echoes what we saw from Gartner about reducing middle management with AI [3]: companies might restructure, with leaner teams empowered by AI getting more done than large teams did before.

Systems Evolve, Not Ship: The mentality shifts from launching products to growing products. A product becomes an evolving service that learns and changes continuously, without the concept of versioned releases. This means companies need to think in terms of lifelong product adaptation. Investment and KPIs may shift to those that capture long-term improvement, like lifetime customer value or learning efficiency, not just launch metrics. This could also change how companies charge or deliver updates (maybe more subscription models or continuous value delivery guarantees).

Ambient, Agent-Mediated Collaboration: Within organizations, as more agents (bots) handle tasks, human collaboration also changes. Teams might “consult” AI agents in meetings as if they were team members. Workflows become more asynchronous and mediated by AI – e.g., an AI summarizer preps meeting notes and highlights decisions needed, team members give input, another AI collates it and actually triggers certain actions. So coordination becomes ambient – things happen (scheduling, reporting, etc.) without direct human coordination because the AI takes care of it. People work together through shared dashboards and AI-managed processes rather than through emailing and manual updates. This can increase efficiency but also requires trust in these agents.

Ethics, Feedback, and Resilience as Core Design Principles: When products self-optimize, ensuring they do so in a way that’s ethical and sustainable is paramount. Thus, design principles now include things like “built-in ethical review” or “feedback loops for stakeholder input.” The product team not only designs for user delight but also for transparency and fairness outcomes. For example, a principle might be that any autonomous change visible to a user should be explainable to that user if they ask (“Why did I see this price?”). This kind of thinking becomes part of product requirements. Also, resilience – the system should handle unexpected events gracefully (like not catastrophically failing if an external API goes down or if a weird user behavior confuses the AI). That leads to things like fail-safes where AI can recognize it’s out of its depth and alert humans.

All these implications underscore that the role of product leadership is shifting from crafting products to crafting the systems that craft products. It’s one more level of abstraction out.

The Dawn of AI-Native Product Ecosystems

Over these four articles, we’ve journeyed from the initial infusion of AI into product development to a vision of products as autonomous, intent-driven ecosystems. Let’s briefly recap the progression:

AI-infused (Article 1): We began with traditional teams supercharging their work with AI: faster development, richer insights, leaner teams. This set the foundation by automating grunt work and speeding the cycle.

Strategically orchestrated (Article 2): Next, we saw the PM role evolve to orchestrating complex, AI-augmented systems, focusing on strategic alignment and data-driven collaboration rather than manual coordination. The team became a symphony guided by insight.

Simulation-led and adaptive (Article 3): Then, we introduced proactive strategy, using AI to anticipate and test the future. Decisions moved from intuition to evidence, making organizations more confident and agile in setting direction.

Autonomous, intent-aligned ecosystems (Article 4): Finally, we envision the product itself becoming an AI-driven entity, adapting in real time to user needs and goals defined by humans, with the PM as an intent steward.

These shifts are already underway in the most adaptive organizations. We see glimpses of it in how Netflix’s algorithms personalize content, how fintech startups use AI assistants to handle customer service or fraud, how Amazon famously simulates changes in their fulfillment network, and how autonomous vehicle systems make decisions on the road. Each piece of this puzzle is being built in various industries. The challenge ahead for product leaders is not whether to adopt AI – that’s a given, as those who don’t will be left behind – but how to do so in a way that maintains relevance, trust, and value in a world where AI is not just a tool, but a partner in the product’s evolution.

To thrive in this future, companies must rethink their talent, processes, and values. They must invest in training and hiring for AI fluency, establish cross-functional teams that include AI systems as members, and ingrain ethical considerations into every step of product development. Product managers and executives should start experimenting if they haven’t: introduce AI into one aspect of the lifecycle, try a simulation for a major decision, pilot an autonomous feature with safety nets. Build comfort and competence progressively.

Ultimately, embracing AI in product management is about amplifying what makes products great – solving real problems for people – by leveraging a power of scale and intelligence we’ve never had before. AI-native product ecosystems have the potential to deliver hyper-personalized experiences, unprecedented reliability and efficiency, and continuous innovation. Imagine products that anticipate needs and fulfill them almost instantly, or services that adapt to each user’s unique context seamlessly. That’s the promise on the horizon.

As we conclude this series, one thing is clear: the future of product management will not look like the past. It’s a future where creativity, strategy, and empathy remain irreplaceably human, but they are executed through and alongside incredibly powerful AI systems.

The winners in this new era will be those who can effectively marry human insight with machine intelligence: crafting visions and cultures where AI drives routine decisions and actions, and humans focus on vision, purpose, and ensuring technology truly serves people.

This symbiotic relationship between PMs and AI will unlock innovation at hyper-scale. It’s an exciting time to be in product leadership: the tools at our disposal are evolving rapidly, and so too must our approaches. Those who lean in and help shape these AI-powered approaches will lead the way in creating products that not only meet needs better than ever before, but do so in ways that are adaptive, ethical, and deeply integrated into the fabric of how we live and work.

The journey from AI-assisted to AI-autonomous products is underway, and it heralds nothing less than a new paradigm for how we envision, build, and grow products in the coming decade.

References:

[1, 3] SHRM, Transforming Work: Gartner’s AI Predictions Through 2029, 2024.

[2] Silicon Valley Product Group, A Vision For Product Teams, 2025.

All views expressed here reflect my personal perspectives and are not affiliated with any current or past employer. Portions of this article have been developed with the assistance of generative AI tools to support content structuring, drafting, and refinement.

Previous articles in this series: